SSH vs OpenVPN for Tunneling

Update 28.01.16

I found some sites referring to this post. Below are the common complaints I saw, and my replies:

- I’m criminally bad at setting up OpenVPN, so the testing is completely off

I’m not an expert in networking, but I have a working knowledge of it, and I spent around two work days messing around with the OpenVPN parameters. This is as good as I could get it, compared to the SSH setup, which required no optimization.

I’ll be delighted to see a better-performing OpenVPN config. Here is how to recreate my testing:

- One machine in each AWS region (US-EAST-1, EU-WEST-1)

- Size: c3.4xlarge (I think)

- OS: vanilla CentOS 6

- No interface configuration (can be modified if needed)

- All packages installed from the CentOS repo

Make sure you show your configuration and your results!

- Comparing OpenVPN and SSH tunneling is like comparing apples and oranges

This is nonsense. I had two tools to solve my problem. I tuned them both to the best of my ability and tested them. One outperformed the other, so I chose it.

Also, there is a comment section, and I’ll be happy if people with interesting replies drop me a line down there so I can learn something new.

Finally, I fixed my diagrams below.

The Story

I was asked to take care of a security challenge: set up Redis replication between two VMs over the internet.

The VMs were on different continents, so I had to keep the bandwidth impact to a minimum.

I thought of 3 options:

- stunnel, which tunnels TCP connections over SSL

- SSH, which offers TCP tunneling over its secure channel (among its other weaponry)

- OpenVPN, which is designed to encapsulate, encrypt and compress traffic between two machines

I quickly dropped stunnel because its setup is nontrivial compared to the other two (no logging, no init file…), and decided to test SSH and OpenVPN.

I was sure that when it came to speed, OpenVPN would be the best, because:

- The first Google results say so (and they even look credible)

- Logic dictates that SSH tunneling will suffer from TCP over TCP, since SSH runs over TCP

- OpenVPN, being a VPN software, is solely designed to move packets from one place to another.

I was so sure of it that I almost didn’t test.

I was quite surprised.

Test 1

I only compared speed, since I decided the encryption of both programs would be good enough.

My test consisted of this procedure:

1. Create a functioning, data-filled Redis instance on server A, port 6379

2. Start an empty Redis instance on server B, port 6379

3. Set up tunneling (according to the method I was testing)

4. Execute `redis-cli -p 6379 slaveof <Target host> <Target port>` on server B

5. Wait for `MASTER <-> SLAVE sync started` to appear in server B’s Redis log

6. Wait for `MASTER <-> SLAVE sync: Finished with success` to appear in server B’s Redis log

7. Clean up

I recorded the time it took server B to go from step 5 to step 6, effectively measuring the duration of a full replication.
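Since both messages from steps 5 and 6 land in server B’s Redis log with timestamps, the duration can also be computed after the fact instead of watched by hand. Below is a minimal Python sketch of that, not necessarily how the numbers in this post were taken. It assumes each log line contains a timestamp of the form `DD Mon HH:MM:SS.mmm` (check the log format of your Redis version) and that the messages are worded exactly as in the steps above, since newer Redis releases word them differently. `replication_duration` is a hypothetical helper name, and the sample lines are made up.

```python
import re
from datetime import datetime

# Assumed log format: each line contains a "DD Mon HH:MM:SS.mmm" timestamp,
# e.g. "1234:S 28 Jan 10:15:32.120 * MASTER <-> SLAVE sync started".
TIMESTAMP = re.compile(r"\d{1,2} [A-Z][a-z]{2} \d{2}:\d{2}:\d{2}\.\d{3}")

START = "MASTER <-> SLAVE sync started"
END = "MASTER <-> SLAVE sync: Finished with success"


def parse_timestamp(line):
    """Extract the timestamp from a Redis log line as a datetime."""
    match = TIMESTAMP.search(line)
    if match is None:
        raise ValueError(f"no timestamp in line: {line!r}")
    # Redis does not log the year; a fixed leap year keeps "29 Feb" parseable.
    return datetime.strptime("2000 " + match.group(0), "%Y %d %b %H:%M:%S.%f")


def replication_duration(lines):
    """Time from the latest sync start to the first success that follows it."""
    started = None
    for line in lines:
        if START in line:
            started = parse_timestamp(line)
        elif END in line and started is not None:
            return parse_timestamp(line) - started
    raise ValueError("no completed sync found in the log")


if __name__ == "__main__":
    # Made-up sample lines, not measurements.
    sample = [
        "1234:S 28 Jan 10:15:32.120 * MASTER <-> SLAVE sync started",
        "1234:S 28 Jan 10:17:45.370 * MASTER <-> SLAVE sync: Finished with success",
    ]
    print(replication_duration(sample))  # 0:02:13.250000
```

If a sync is retried, the latest "started" line wins, so a failed first attempt does not inflate the result. A sync that crosses New Year’s Eve would come out negative, because the year is not logged.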

The Redis data set was about 1GB. Not the biggest I’ve ever seen, but enough for my tests.

I played around with a few parameters, and these are my results:

| platform | protocol | compression | duration |
|---|---|---|---|
| OpenVPN | TCP | no | 21m |
| OpenVPN | TCP | yes | 15m |
| OpenVPN | UDP | no | 6m |
| OpenVPN | UDP | yes | 5m |
| SSH | TCP | default | 1m50s |
| SSH | TCP | no | 1m30s |
| SSH | TCP | yes | 2m30s |

As you can see, SSH beats OpenVPN. By far.
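To line these durations up against the iperf rates in Test 2, they can be turned into a rough average throughput. The Python sketch below does only that arithmetic. It assumes exactly 1 GB (10^9 bytes) crossed the link in every run, which is an approximation: what Redis actually ships during a full sync is an RDB dump, whose size can differ from the in-memory data set.

```python
# Replication durations from the Test 1 table, in seconds.
DURATIONS = {
    ("OpenVPN", "TCP", "no"): 21 * 60,
    ("OpenVPN", "TCP", "yes"): 15 * 60,
    ("OpenVPN", "UDP", "no"): 6 * 60,
    ("OpenVPN", "UDP", "yes"): 5 * 60,
    ("SSH", "TCP", "default"): 60 + 50,
    ("SSH", "TCP", "no"): 60 + 30,
    ("SSH", "TCP", "yes"): 2 * 60 + 30,
}

# Assumption: about 1 GB (10**9 bytes) crossed the link in each run.
TRANSFERRED_BITS = 10**9 * 8


def megabits_per_second(seconds, bits=TRANSFERRED_BITS):
    """Average rate in Mb/s (10**6 bits per second) for a transfer of `bits`."""
    return bits / seconds / 10**6


if __name__ == "__main__":
    for (platform, protocol, compression), seconds in DURATIONS.items():
        rate = megabits_per_second(seconds)
        print(f"{platform:8} {protocol} compression={compression:8} {rate:5.1f} Mb/s")
```

Under that assumption, 1m30s works out to about 88.9 Mb/s and 21m to about 6.3 Mb/s.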

I was surprised to see this, so I did some additional tests, using iperf.

Test 2

My second test utilized iperf, and I left OpenVPN compression on, because disabling it clearly wasn’t helping.

Server A was running the iperf server, using iperf -s.

Server B was running the iperf client, using iperf -c <SERVER ADDRESS> -p <PORT>.
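In this basic mode, the iperf client opens a single TCP connection to the server, pushes data through it for a fixed time and reports the achieved rate. The Python sketch below mimics that idea on a small scale, sending a fixed number of bytes instead of running for a fixed time. It only illustrates what is being measured and is not a substitute for iperf; the function names and the 64 KiB chunk size are arbitrary, and the demo runs both ends on localhost, whereas the real test ran them on server A and server B.

```python
import socket
import threading
import time

CHUNK = 64 * 1024  # arbitrary buffer size for this sketch


def sink(server_sock, received):
    """Accept one connection, count bytes until the sender half-closes, then close."""
    conn, _ = server_sock.accept()
    with conn:
        total = 0
        while data := conn.recv(CHUNK):
            total += len(data)
    received.append(total)


def measure_throughput(host, port, total_bytes):
    """Send total_bytes over one TCP connection; return (bytes_sent, Mb/s)."""
    payload = b"\0" * CHUNK
    sent = 0
    start = time.perf_counter()
    with socket.create_connection((host, port)) as sock:
        while sent < total_bytes:
            chunk = payload[: min(CHUNK, total_bytes - sent)]
            sock.sendall(chunk)
            sent += len(chunk)
        sock.shutdown(socket.SHUT_WR)  # tell the receiver we are done sending
        sock.recv(1)  # returns b"" once the receiver has read everything and closed
    elapsed = time.perf_counter() - start
    return sent, sent * 8 / elapsed / 1e6


def local_demo(total_bytes=50 * 1024 * 1024):
    """Run sender and receiver on localhost (a real test uses two machines)."""
    with socket.socket() as server:
        server.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        server.listen(1)
        received = []
        receiver = threading.Thread(target=sink, args=(server, received))
        receiver.start()
        sent, mbps = measure_throughput("127.0.0.1", server.getsockname()[1], total_bytes)
        receiver.join()
    return sent, received[0], mbps


if __name__ == "__main__":
    sent, received, mbps = local_demo()
    print(f"sent {sent} B, received {received} B, {mbps:.0f} Mb/s")
```

Waiting for the receiver to close before stopping the clock keeps the sender from counting data that is still sitting in its own socket buffer.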

Below is my test summary.

| platform | protocol | encryption | speed (Mb/s) |
|---|---|---|---|
| OpenVPN | TCP | Blowfish | 13 |
| OpenVPN | TCP | AES-256-CBC | 12 |
| OpenVPN | UDP | Blowfish | 43 |
| OpenVPN | UDP | AES-256-CBC | 36 |
| SSH | TCP | AES128-CTR | 91 |

Although the gap is narrower, SSH still wins. After some helpful hints on ServerFault, I understood why, contrary to popular opinion, SSH is faster.

The solution

The difference between SSH and OpenVPN that gives SSH its edge is the OSI layer each of them works on.

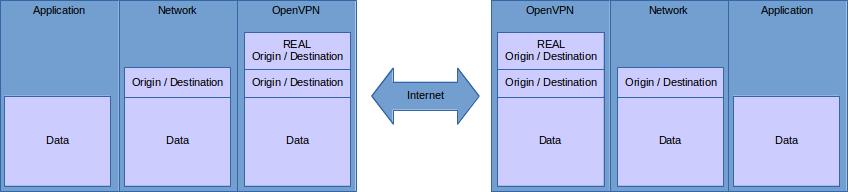

OpenVPN

Being a VPN service, OpenVPN can operate as:

- TUN, a layer 3 (IP) device

- TAP, a layer 2 (MAC) device

Being a network device allows OpenVPN to support diverse protocols (anything over IP with TUN, and anything over Ethernet (802.3) with TAP), diverse destinations (different IP addresses, broadcasts, etc.) and diverse ports. However, to do that, it has to preserve the original packet structure: it takes most of the original packet, wraps it in its own packet (to encrypt it and give it a new destination), and sends it to the other OpenVPN instance, where it’s unpacked.

This generates overhead, like this:

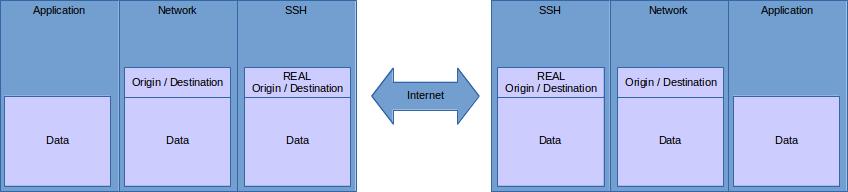
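To make the wrapping concrete, here is a toy Python sketch of TUN-style encapsulation. It is not OpenVPN’s wire format: the 4-byte tunnel header is made up, encryption and compression are left out, the IPv4 checksum is not computed, the payload bytes merely stand in for an inner TCP segment, and the outer IP and UDP/TCP headers the kernel adds when the wrapped bytes are sent are not shown. The addresses are hypothetical. What it does show is that the inner IP header, with its original source and destination, travels intact inside the tunnel and has to be unpacked again at the far end.

```python
import socket
import struct

# 20-byte IPv4 header without options.
IPV4_HEADER = struct.Struct("!BBHHHBBH4s4s")
# Hypothetical tunnel header (NOT OpenVPN's format): a 2-byte marker and the inner length.
TUNNEL_HEADER = struct.Struct("!HH")


def build_ipv4_packet(src, dst, payload, proto=socket.IPPROTO_TCP):
    """Build a minimal IPv4 packet; the checksum is left at 0 for illustration."""
    header = IPV4_HEADER.pack(
        (4 << 4) | 5,                     # version 4, header length 5 x 32-bit words
        0,                                # type of service
        IPV4_HEADER.size + len(payload),  # total length
        0,                                # identification
        0,                                # flags / fragment offset
        64,                               # TTL
        proto,                            # protocol number of the carried payload
        0,                                # header checksum (not computed)
        socket.inet_aton(src),
        socket.inet_aton(dst),
    )
    return header + payload


def encapsulate(inner_packet, marker=0x7E57):
    """Wrap the whole inner packet, headers included, behind the tunnel header."""
    return TUNNEL_HEADER.pack(marker, len(inner_packet)) + inner_packet


def decapsulate(tunnel_payload):
    """Strip the tunnel header and hand back the original inner packet."""
    _marker, length = TUNNEL_HEADER.unpack_from(tunnel_payload)
    start = TUNNEL_HEADER.size
    return tunnel_payload[start : start + length]


def inner_addresses(packet):
    """Return (source, destination) from an IPv4 header."""
    fields = IPV4_HEADER.unpack_from(packet)
    return socket.inet_ntoa(fields[8]), socket.inet_ntoa(fields[9])


if __name__ == "__main__":
    inner = build_ipv4_packet("10.8.0.2", "10.8.0.1", b"PING\r\n")
    wrapped = encapsulate(inner)
    print(len(inner), len(wrapped))  # 26 30
    print(inner_addresses(decapsulate(wrapped)))  # ('10.8.0.2', '10.8.0.1')
```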

SSH connection forwarding

On the other hand, SSH connection forwarding operates at layer 4 (TCP). Because of that, you can only forward one port (unless you’re using dynamic forwarding, which has its own overhead), and it has to be TCP. However, because SSH operates at a higher OSI layer, less of the original data has to be preserved, so it has less overhead.

Some SSH netstats

I recorded the socket status when running redis-cli over SSH forwarding.

The Redis server is listening on port 6379, and the forwarding is on port 20000.

The commands I used are:

```shell
ssh -f <SERVER IP> -L 20000:127.0.0.1:6379 -N
redis-cli -p 20000
```

I removed the listening sshd socket, because it’s irrelevant.

Before running redis-cli, we can see that SSH has established a tunnel and opened a listening localhost socket on the client:

```
backslasher@client$ netstat -nap | grep -P '(ssh|redis)'
...
tcp 0 0 127.0.0.1:20000 0.0.0.0:* LISTEN 20879/ssh
tcp 0 0 10.105.16.225:53142 <SERVER IP>:22 ESTABLISHED 20879/ssh
...
```

```
backslasher@server$ netstat -nap | grep -P '(ssh|redis)'
...
tcp 0 0 0.0.0.0:6379 0.0.0.0:* LISTEN 54328/redis-server
tcp 0 0 <SERVER IP>:22 <CLIENT IP>:53142 ESTABLISHED 53692/sshd
...
```

After running redis-cli, we can see a new connection to the Redis socket on the server, originating from sshd:

```
backslasher@client$ netstat -nap | grep -P '(ssh|redis)'
...
tcp 0 0 127.0.0.1:20000 0.0.0.0:* LISTEN 20879/ssh
tcp 0 0 127.0.0.1:20000 127.0.0.1:53142 ESTABLISHED 20879/ssh
tcp 0 0 127.0.0.1:53142 127.0.0.1:20000 ESTABLISHED 21692/redis-cli
...
```

```
backslasher@server$ netstat -nap | grep -P '(ssh|redis)'
...
tcp 0 0 0.0.0.0:6379 0.0.0.0:* LISTEN 54328/redis-server
tcp 0 0 127.0.0.1:6379 127.0.0.1:42680 ESTABLISHED 54328/redis-server
tcp 0 0 127.0.0.1:42680 127.0.0.1:6379 ESTABLISHED 54333/sshd
tcp 0 0 <SERVER IP>:22 <CLIENT IP>:53142 ESTABLISHED 52889/sshd
...
```

As we can see, SSH creates a loopback connection on both the client and the server, so redis-cli and redis-server never address each other directly.

Thanks to that, this information (client IP/port, server IP/port) doesn’t have to be transferred, saving overhead.
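The pattern in these netstat listings can be reproduced in a few lines. Below is a minimal Python sketch of a plain, unencrypted TCP port forwarder: it accepts a connection on a local port, opens a separate connection to the target, and copies only the payload bytes between the two. It is a stand-in for the idea, not SSH’s implementation: real `ssh -L` also encrypts the bytes and carries them between the two machines inside its single SSH connection, a hop this single-host sketch leaves out. The upper-casing `shout_back` server is a hypothetical stand-in for Redis, and all names and ports are illustrative.

```python
import asyncio

BUFFER = 64 * 1024  # arbitrary read size for this sketch


async def pipe(reader, writer):
    """Copy payload bytes one way until EOF, then pass the EOF on."""
    while data := await reader.read(BUFFER):
        writer.write(data)
        await writer.drain()
    if writer.can_write_eof():
        writer.write_eof()


async def handle(client_reader, client_writer, target_host, target_port):
    """Serve one forwarded connection: accept on one side, connect out on the other."""
    # A brand-new connection to the target: its source address and port belong
    # to the forwarder, so nothing about the original client has to be sent along.
    target_reader, target_writer = await asyncio.open_connection(target_host, target_port)
    try:
        await asyncio.gather(
            pipe(client_reader, target_writer),
            pipe(target_reader, client_writer),
        )
    finally:
        for writer in (client_writer, target_writer):
            writer.close()
            await writer.wait_closed()


async def shout_back(reader, writer):
    """Hypothetical stand-in for Redis: reply with the request upper-cased."""
    request = await reader.read()  # read until the client half-closes
    writer.write(request.upper())
    await writer.drain()
    writer.close()
    await writer.wait_closed()


async def demo():
    # Port 0 lets the OS pick free ports for both listeners.
    target = await asyncio.start_server(shout_back, "127.0.0.1", 0)
    target_port = target.sockets[0].getsockname()[1]
    forwarder = await asyncio.start_server(
        lambda r, w: handle(r, w, "127.0.0.1", target_port), "127.0.0.1", 0
    )
    forward_port = forwarder.sockets[0].getsockname()[1]

    # The client only ever talks to the forwarder's local port, like redis-cli -p 20000.
    reader, writer = await asyncio.open_connection("127.0.0.1", forward_port)
    writer.write(b"ping")
    writer.write_eof()
    reply = await reader.read()
    writer.close()
    await writer.wait_closed()

    for server in (forwarder, target):
        server.close()
        await server.wait_closed()
    return reply


if __name__ == "__main__":
    print(asyncio.run(demo()))  # b'PING'
```

Because each leg is its own TCP connection, the kernel builds fresh IP and TCP headers for each one; the forwarder never sees, and never has to carry, the headers of the connection it accepted.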

TL;DR

As long as you only need one TCP port forwarded, SSH is a much faster choice, because it has less overhead.